[U51] Tesla Full Self-Driving (Supervised)

Millions of electric vehicles are training autonomous navigation with human operators

Dear Readers,

In this Update for Product | Strategy | Innovation, I will share my personal experience and opinions regarding the latest versions of Tesla Full Self-Driving, also known as FSD (Supervised) v12. I was not an early adopter of Tesla FSD even though earlier versions were available for me to beta test. I kept up with the improvements others reported, but I didn’t use earlier versions except for the more basic Autopilot features on highway trips.

But my interest changed when FSD (Supervised) v11.4 was enabled earlier this year on my Tesla Model Y. I wanted to verify the reports of superior performance as Tesla evolved its vision-only, end-to-end, in-vehicle neural network and built out massive computing infrastructure to rapidly train improved versions and release them every few weeks through over-the-air software updates to the vehicle.

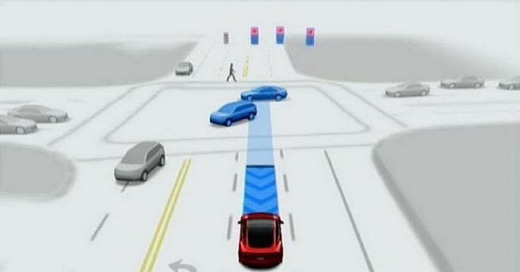

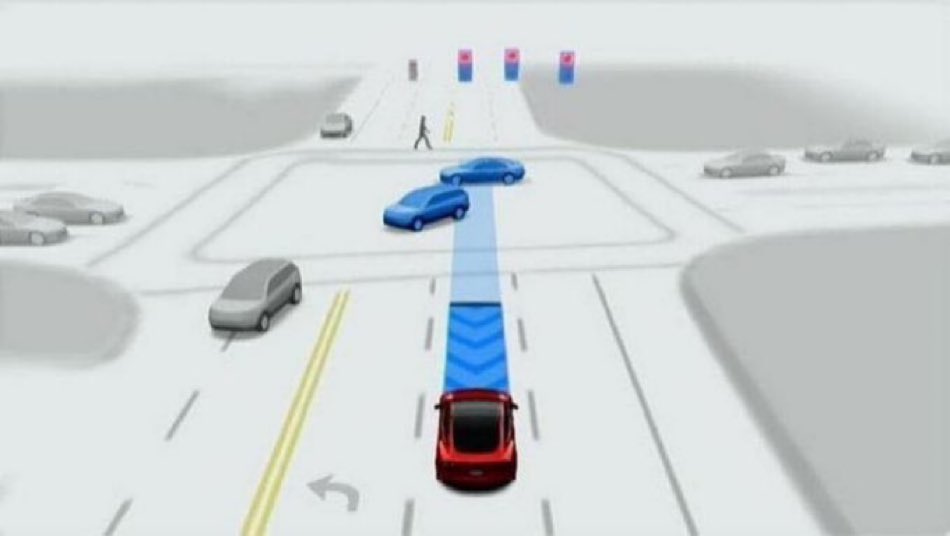

Activating FSD (Supervised) is as easy as a downward motion on the right steering wheel stalk. An audio tone acknowledges FSD (Supervised) is active. The navigation screen also shows the planned path of the vehicle in blue with FSD (Supervised) vs. grey when you are operating the vehicle manually. While FSD (Supervised) is active with a destination entered into the navigation display, the vehicle takes care of all navigation control to reach that destination.

The vehicle “nags” you every minute or so to apply some steering resistance to verify you are actively “supervising” FSD so you can intervene, if needed, to reduce the risk of an accident. However, v12.4.2 eliminates these “nags” by using the camera inside the vehicle to monitor the driver’s attention on the road to verify active “supervision”. The “nags” are still used if the camera cannot monitor the driver’s eyes because the driver is wearing sunglasses or a hat with a visor.

In this Update, I will cover:

1. March of 9s
2. My Tesla FSD (Supervised) v12 experience
3. Tesla FSD technology enables more advanced robotics

I will then wrap up with Some Final Thoughts.

1. March of 9s

Tesla continues to scale its computing capabilities to train its neural network models for autonomous navigation. The company uses driving simulations along with real-world video captured from professional drivers, from shadow mode during manual driving, and from interventions that disengage FSD (Supervised) across the Tesla fleet. Tesla has reported completing 1.2 billion miles driven with FSD in its vehicles through April 2024.

Tesla has reportedly installed 35,000 Nvidia H100 GPUs to support AI training in addition to prior AI computing capacity, including its Dojo supercomputer with its own proprietary Dojo semiconductor technology. Tesla improved in-vehicle inference computing with the release of Hardware 4, which includes higher-resolution cameras, for the refreshed Model S and Model X vehicles in January 2023.

Hardware 5 with additional improvements is scheduled for release around January 2026. To date, FSD has not been optimized with separate neural networks specific to Hardware 3 and Hardware 4, but Tesla is deploying a new supercomputer at Giga Texas later this year to train models specific to Hardware 4. This will likely unlock additional performance from the advanced technology in Hardware 4.

To summarize the core levers Tesla has to improve the FSD technology stack, it can:

- Train, test, and deploy more advanced neural networks to the Tesla fleet
  - This requires keeping compute capabilities current with the top supercomputing clusters in the world to continuously train more advanced neural networks
  - This requires building and maintaining expanding databases of driving data to train more advanced neural networks
  - This requires designing the most advanced neural network architectures specifically for autonomous navigation
  - This requires designing the most advanced workflows to train, verify, and validate neural network models against prior models using huge databases of driving data and the most advanced supercomputer technology
  - This also requires deploying production-ready, version-controlled neural networks to the Tesla fleet

- Design, build, test, and deploy more advanced in-vehicle hardware and software
  - This requires deploying the latest sensor technology to acquire and process 360-degree, high-speed video with multiple high-fidelity in-vehicle cameras and driving data
  - This requires the latest semiconductor technology for in-vehicle inference computing to operate the neural network
  - This requires the latest in-vehicle computer hardware to operate inference computing to control steering, accelerating, and braking the vehicle
  - This also requires requesting, purchasing (if needed), and receiving over-the-air updates of version-specific neural network models to the vehicle

- Acquire video at scale with specific in-vehicle hardware and neural network model version to build edge cases for training and to evaluate quality and safety
  - This requires acquiring driving data in shadow mode while the vehicle is being driven manually
  - This requires automatically uploading driving data with an intervention to disengage FSD and the human operator’s voice feedback about why they disengaged FSD
  - This also requires monitoring each vehicle mile driven with FSD and accidents when they occur, including the driving data

Key vehicle safety outcomes are reported as the number of fatalities per 100 million miles driven. When the collision rate is less than 1.0 per 1 million miles driven, at least 999,999 of every 1 million miles are driven without a collision, which is 99.9999%, or six 9s. As the collision rate decreases, safety improves with the March of 9s. 2022 driving statistics from the U.S. Federal Highway Administration reported 1.3 fatalities per 100 million annual vehicle miles traveled.
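To make the March of 9s arithmetic concrete, here is a minimal sketch in Python. It assumes, as a simplification, that each collision or fatality "uses up" exactly one mile, so the event-free fraction is 1 minus the events per mile. The function names are my own and are only for illustration.

```python
import math


def nines_per_million(events_per_million_miles: float) -> float:
    """Return the number of 9s in the event-free fraction of miles.

    Simplifying assumption: each event counts as one "failed" mile,
    so 1.0 event per 1 million miles leaves 99.9999% of miles clean.
    """
    failure_fraction = events_per_million_miles / 1_000_000
    return -math.log10(failure_fraction)


def nines_per_100_million(events_per_100_million_miles: float) -> float:
    """Same calculation for rates quoted per 100 million miles."""
    return nines_per_million(events_per_100_million_miles / 100)


# 1.0 collision per 1 million miles is the six 9s threshold described above.
print(round(nines_per_million(1.0), 2))

# 1.3 fatalities per 100 million miles, the 2022 figure quoted above.
print(round(nines_per_100_million(1.3), 2))
```

A fractional result simply means the rate sits between two whole 9s. Each tenfold reduction in the event rate adds exactly one more 9.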

The National Highway Traffic Safety Administration (NHTSA) reported there were 1.5 vehicle crashes per 1 million miles driven in 2022 across the total U.S. vehicle fleet. Tesla’s 2022 Impact Report highlighted that Tesla vehicles had only 0.31 vehicle crashes per 1 million miles driven with FSD (beta) engaged, mostly on non-highway miles, and 0.18 vehicle crashes per 1 million miles driven with Autopilot engaged, mostly on highway miles.
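As a rough comparison of the crash rates quoted above, the short sketch below divides the U.S. fleet rate by each Tesla rate. The variable and function names are mine. The ratios are only indicative, since the figures cover different mixes of highway and non-highway miles and may not define a crash in the same way.

```python
# Crashes per 1 million miles driven in 2022, as quoted above.
US_FLEET_RATE = 1.5    # NHTSA, total U.S. vehicle fleet
FSD_BETA_RATE = 0.31   # Tesla 2022 Impact Report, FSD (beta) engaged
AUTOPILOT_RATE = 0.18  # Tesla 2022 Impact Report, Autopilot engaged


def times_fewer_crashes(baseline_rate: float, rate: float) -> float:
    """How many times lower `rate` is than `baseline_rate`."""
    return baseline_rate / rate


fsd_ratio = times_fewer_crashes(US_FLEET_RATE, FSD_BETA_RATE)
autopilot_ratio = times_fewer_crashes(US_FLEET_RATE, AUTOPILOT_RATE)

print(f"FSD (beta): about {fsd_ratio:.1f}x fewer crashes per mile")
print(f"Autopilot:  about {autopilot_ratio:.1f}x fewer crashes per mile")
```

On these numbers, FSD (beta) comes out a little under 5 times lower than the U.S. fleet rate, and Autopilot a little over 8 times lower.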

Elon Musk has stated since 2018 that 6 billion miles driven with Tesla FSD are needed to train Tesla FSD to reach adequate safety for full autonomy. It is estimated this would also enable the robotaxi concept without a human driver when the safety is at least 10 times greater than with a human operator. And recent data show the collision rate is actually increasing with human operators, who are increasingly distracted by smartphones in addition to driving while under the influence of alcohol or drugs.
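Putting the figures from this section side by side gives a back-of-the-envelope view of how far along Tesla might be. This sketch assumes the crash rate is a fair proxy for "safety" and that the 2022 crash rates and the April 2024 mileage can be compared directly. Both are my own simplifications, and the variable names are illustrative only.

```python
# Figures quoted earlier in this section.
MILES_NEEDED = 6_000_000_000    # miles Musk has said are needed
MILES_REPORTED = 1_200_000_000  # FSD miles reported through April 2024
US_FLEET_RATE = 1.5             # crashes per 1 million miles, 2022
FSD_BETA_RATE = 0.31            # crashes per 1 million miles, FSD (beta), 2022
SAFETY_MULTIPLE = 10            # "at least 10 times greater" safety target

# Share of the stated mileage goal reported so far.
miles_fraction = MILES_REPORTED / MILES_NEEDED

# Crash rate implied by being 10 times safer than the U.S. fleet
# (an assumption: "safety" is measured purely by crash rate).
target_rate = US_FLEET_RATE / SAFETY_MULTIPLE

# Further reduction still needed from the 2022 FSD (beta) rate.
remaining_factor = FSD_BETA_RATE / target_rate

print(f"Mileage goal reached: {miles_fraction:.0%}")
print(f"Target crash rate: {target_rate:.2f} per 1 million miles")
print(f"Reduction still needed: about {remaining_factor:.1f}x")
```

Under these assumptions, the fleet has logged about one fifth of the stated mileage, and the 2022 FSD (beta) crash rate would need to fall by roughly half again to reach the 10 times target.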

2. My Tesla FSD (Supervised) v12 experience

I would estimate I have only driven about 400 miles starting with FSD (Supervised) v11.4 through FSD (Supervised) v12.3.6 so far this year. The improvements with new releases have usually been dramatic. You need some experience using FSD to learn about its tendencies and when it is not decisive when approaching a scenario. The steering wheel jitters a bit if FSD is modifying its path. This doesn’t mean you need to intervene, but you should be ready to intervene if needed.

Signage defects

A noticeable issue when I first started using FSD was how a twisted yield or stop sign for a merging lane could be mistaken for a traffic sign on the primary road, which has the right-of-way. There is one of these when driving westbound on Route 9 through Newton, MA. Without moving traffic in front of my vehicle as a cue, FSD (Supervised) v11.4 would slow down almost to a stop, apparently to yield, even though I had the right-of-way.

This issue has improved significantly, perhaps due to repeated operator feedback at this specific location, but it does indicate how defects in lane markings, signal lights, and traffic signs can impact autonomous navigation when computer vision is the vehicle’s primary means of perceiving the road. This supplemental highway infrastructure will need to meet adequate quality standards to support wide use of autonomous navigation. However, vehicles using autonomous navigation will be able to identify and communicate these defects.

Roundabouts

Another issue in New England shows up at some roundabouts, where the vehicle approaches somewhat blind to the fact that a roundabout is ahead and that it must yield to traffic already in it. Since traffic within a roundabout can move quickly in New England, yielding to this traffic before entering is critical. This requires adequate signage to identify the approaching roundabout and warn drivers to yield.

Passing Lane

And when navigating a multi-lane highway with busy traffic, FSD (Supervised) v12.3.6 tends to stay in the passing lane for too long when it could change lanes to let faster-moving traffic pass. Safety is key, though, and the vehicle is presumably just waiting for clear passage to change lanes.

Traffic lights transitioning to yellow

And when approaching a traffic light that turns yellow, FSD (Supervised) will sometimes stop more quickly in anticipation of red than I would have if driving manually. Tesla is certainly opting for safety. This could improve in the future if infrastructure improvements enable traffic lights to broadcast their status, perhaps as a countdown to yellow, so approaching vehicles could start to slow down when it is estimated they could not clear the intersection before yellow turns to red.

3. Tesla FSD technology enables more advanced robotics

Tesla presented the concept of Tesla owners deploying their vehicle to a fleet of robotaxis in the 2016 Master Plan, Part Deux. These robotaxis are hailed like an Uber or Lyft vehicle, but without the need for a human operator. This significantly reduces the cost of ride-sharing, which helps point-to-point transportation scale in urban areas. The same mobile app could be used to deploy a Tesla EV into and out of the robotaxi fleet and to request a ride from one location to another.

The biggest hurdle for the robotaxi concept is the regulatory hurdle to operate a fully autonomous vehicle without a human operator. Other companies like Cruise and Waymo have outfitted cars with additional sensing capabilities like lidar at significant costs to enable their own robotaxi concept for company-owned fleets in specific zones of a few selected cities including San Francisco where they have the regulatory approval to do so. Vehicles can start the robotaxi concept with a human operator while verifying safety outcomes, but a true robotaxi vehicle would not have a steering wheel or any controls for a human operator to save costs.

Tesla is expected to reveal a new vehicle designed to operate only as a robotaxi on August 8th. This vehicle will need Tesla’s FSD technology to advance to a state that enables the regulatory approval to operate such a vehicle without a human operator. Building and verifying the robotaxi vehicle production line, manufacturing an initial fleet of robotaxis and continuing to advance the FSD technology can all happen in parallel for a few years and then merge to realize the deliverable concept with regulatory approval starting in specific markets in China and/or the U.S.

Tesla is also advancing its Optimus humanoid robot program using FSD technology. Cameras for image and video acquisition plus the FSD semiconductor chip and FSD computer for inference computing are being used for autonomous operation of this robot, too. The computer vision will likely share core technology between robotaxis and humanoid robots, but also diverge with optimized technology for each use case. However, having this core technology in-house along with the ability to acquire video and train neural networks gives Tesla a huge advantage for both the robotaxi and humanoid robots.

Tesla may eventually manufacture robotaxis with the assistance of humanoid robots and operate these vehicles without a human operator to reduce costs and maximize profits. Labor is a key input cost for manufacturing. If labor costs are managed with scaled production, economic growth is more sustainable. Tesla is all-in to advance and deploy artificial intelligence through neural networks and robotics.

Some Final Thoughts

I will continue to use Tesla FSD (Supervised) to help evaluate its pace of innovation for autonomous navigation. I will monitor how Tesla addresses the issues I identified above and any others I notice in the future. However, most trips with FSD (Supervised) v12.3.6 do not require any interventions until the destination is reached. That means all turns, stopping for traffic, lane changes, etc. happen automatically while I only supervise.

I look forward to using v12.4.2 with hands-free driving as long as I keep supervising with my own vision and attention on the road ahead. I have a few extended road trips ahead to thoroughly evaluate FSD (Supervised) on interstate highways where the need to intervene is less common.

I also look forward to the anticipated integration of xAI’s Grok into Tesla FSD (Supervised) for voice-based prompts. These prompts would not be interventions per se, but enhancements to the experience to maybe request a lane change if it is safe to do so or an alternate path. You could also ask Grok about options for food or things to see while driving and add the destination if you choose to do so. This would provide hands-free search for information, chat, and autonomous navigation as part of Tesla FSD (Supervised) while driving. Welcome to KITT and Knight Rider in a Tesla.

Best,

Stephen

I’m long TSLA, which is mentioned in this post. Nothing in this Update is intended to serve as financial advice. Do your own research. The opinions and views expressed in this newsletter are those of the author. They do not purport to reflect the opinions, views or policies of any other organization, company or employer.